AI Inference Market Snapshot

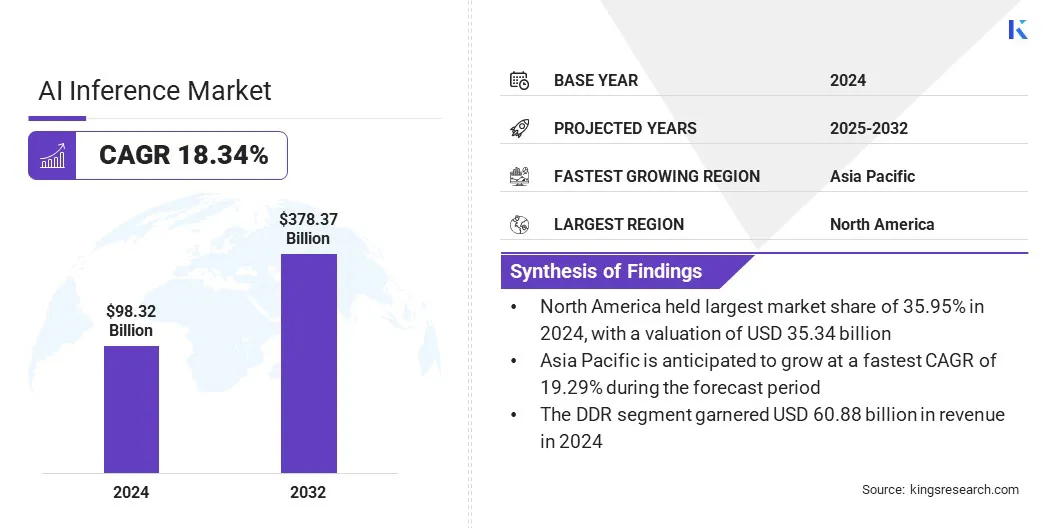

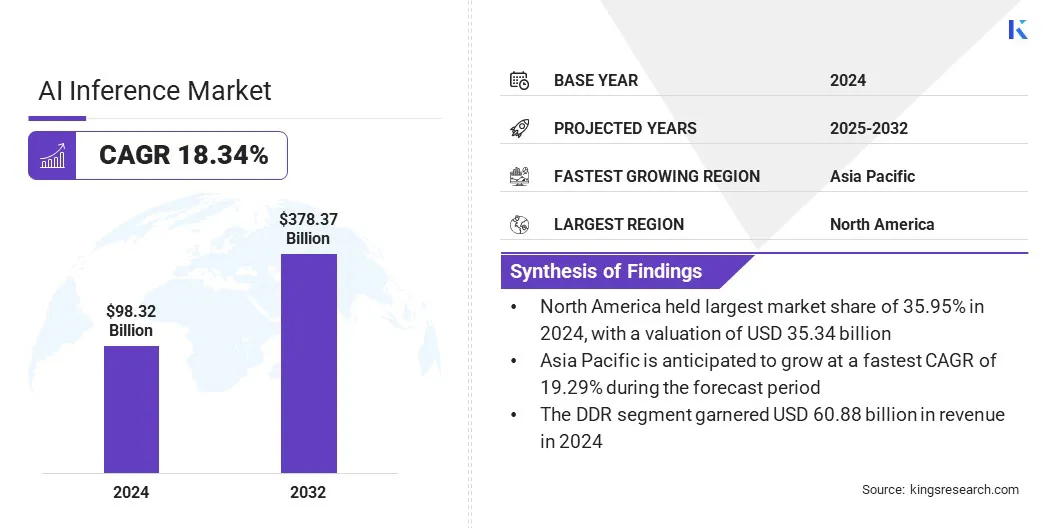

The global AI inference market size was valued at USD 98.32 billion in 2024 and is projected to grow from USD 116.30 billion in 2025 to USD 378.37 billion by 2032, exhibiting a CAGR of 18.34% during the forecast period. The market is experiencing robust growth, propelled primarily by the rapid proliferation of generative AI applications across diverse industries.

As enterprises increasingly deploy AI models for tasks such as content generation, real-time translation, and personalized recommendations, the demand for efficient, high-performance inference solutions has surged.

Key Market Highlights:

- The global market size was valued at USD 98.32 billion in 2024.

- The market is projected to grow at a CAGR of 18.34% from 2025 to 2032.

- North America held a share of 35.95% in 2024, valued at USD 35.34 billion.

- The GPU segment garnered USD 27.61 billion in revenue in 2024.

- The DDR segment is expected to reach USD 228.57 billion by 2032.

- The cloud segment is projected to generate a revenue of USD 151.53 billion by 2032.

- The generative AI segment is expected to reach USD 136.69 billion by 2032.

- The enterprises segment is estimated to reach USD 164.68 billion by 2032.

- Asia Pacific is anticipated to grow at a robust CAGR of 19.29% over the forecast period.
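The headline figures above can be cross-checked with a short calculation. The sketch below is illustrative only: it assumes the standard compound annual growth rate formula over the seven annual periods from 2025 to 2032 and recomputes North America's 2024 value from its reported share. The function and variable names are hypothetical and not part of the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.18 means 18%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report (USD billion).
market_2024 = 98.32
market_2025 = 116.30
market_2032 = 378.37

# 2025 -> 2032 spans seven annual growth periods.
implied_cagr = cagr(market_2025, market_2032, years=7)

# North America's 2024 value implied by its reported 35.95% share.
north_america_2024 = market_2024 * 0.3595

print(f"Implied CAGR 2025-2032: {implied_cagr:.2%}")
print(f"Implied North America 2024 value: USD {north_america_2024:.2f} billion")
```

Both results land within rounding distance of the reported 18.34% CAGR and USD 35.34 billion, which suggests the headline numbers are internally consistent.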

Major companies operating in the AI inference industry are OpenAI, Amazon.com, Inc., Alphabet Inc, IBM, Hugging Face, Inc., Baseten, Together Computer Inc, Deep Infra, Modal, NVIDIA Corporation, Advanced Micro Devices, Inc., Intel Corporation, Cerebras, Huawei Investment & Holding Co., Ltd., and d-Matrix, Inc.

AI Inference Market Overview

The increasing emphasis on data sovereignty and regulatory compliance is influencing enterprise demand for AI inference solutions. Organizations increasingly prefer inference services that deliver real-time performance with complete control over data and infrastructure.

- In June 2025, Gcore and Orange Business launched a strategic co-innovation program to deliver a sovereign, production-grade AI inference service. The solution combines Gcore’s AI inference private deployment service with Orange Business’ trusted cloud infrastructure, enabling enterprises to deploy real-time, compliant inference workloads at scale across Europe, with a focus on low-latency performance, regulatory compliance, and operational simplicity.

Market Driver

Proliferation of Generative AI Applications

The market is experiencing rapid growth, propelled by the proliferation of generative AI applications. As organizations increasingly deploy large language models, generative design tools, virtual assistants, and content creation platforms, the need for fast, accurate, and scalable inference capabilities has intensified.

These generative applications demand high-throughput performance to process vast and complex datasets while delivering real-time, contextually relevant outputs. To address these requirements, businesses are adopting advanced inference hardware, optimizing software stacks, and utilizing cloud-native infrastructure that supports dynamic scaling.

This surge in generative AI use across sectors such as healthcare, finance, education, and entertainment is transforming digital workflows and accelerating the demand for high-performance inference solutions.

- In April 2025, Google introduced Ironwood, its seventh-generation TPU, designed specifically for inference. Ironwood supports large-scale generative AI workloads with enhanced compute power, memory, and energy efficiency. It integrates Google's Pathways software and features improved SparseCore and ICI bandwidth, enabling high-performance and scalable inference for advanced AI models across various industries.

Market Challenge

Scalability and Infrastructure Challenges in AI Inference

A major challenge impeding the progress of the AI inference market is achieving scalability and managing infrastructure complexity. As organizations increasingly adopt AI models for real-time, high-volume decision-making, maintaining consistent performance across distributed environments becomes difficult.

Scaling inference systems to meet fluctuating demand without overprovisioning resources or compromising latency is a persistent concern. Additionally, the complexity of deploying, managing, and optimizing diverse hardware and software stacks across hybrid and multi-cloud environments adds operational strain.

To address these challenges, companies are investing in dynamic infrastructure solutions, including serverless architectures, distributed inference platforms, and automated resource orchestration tools.

These innovations enable enterprises to scale inference workloads efficiently while simplifying infrastructure management, thus supporting broader AI adoption across various industries.

- In December 2024, Amazon introduced a new “scale down to zero” feature for SageMaker inference endpoints. This feature allows endpoints to automatically scale to zero instances during inactivity, optimizing resource management and cost efficiency for cloud-based AI inference operations.
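To make the idea of scaling to zero concrete, the following is a minimal sketch of an autoscaling decision rule. It is not the SageMaker API or Amazon's implementation: `EndpointState`, `desired_instances`, the idle timeout, and the per-instance capacity are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class EndpointState:
    instances: int            # instances currently running
    in_flight_requests: int   # requests currently being served or queued
    idle_seconds: float       # time since the last request arrived


def desired_instances(
    state: EndpointState,
    idle_timeout_s: float = 300.0,    # assumed inactivity window
    requests_per_instance: int = 4,   # assumed capacity of one instance
    max_instances: int = 8,           # assumed upper bound
) -> int:
    """Return the instance count a simple scale-to-zero policy would target."""
    if state.in_flight_requests == 0:
        # No traffic: release everything once the idle window has elapsed,
        # otherwise hold current capacity to avoid thrashing.
        if state.idle_seconds >= idle_timeout_s:
            return 0
        return state.instances
    # Traffic present: ceiling division gives the instances needed,
    # with at least one instance and at most max_instances.
    needed = -(-state.in_flight_requests // requests_per_instance)
    return min(max(needed, 1), max_instances)


print(desired_instances(EndpointState(instances=2, in_flight_requests=0, idle_seconds=600)))
print(desired_instances(EndpointState(instances=0, in_flight_requests=9, idle_seconds=0)))
```

The trade-off in any policy of this kind is cold-start latency: an endpoint scaled to zero pays a startup delay on the first request after an idle period, so the idle timeout balances cost savings against responsiveness.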

Market Trend

Enabling Real-Time Intelligence with Hybrid Cloud Inference

The market is witnessing a growing trend toward hybrid cloud-based inference solutions, supported by the rising demand for scalability, flexibility, and low-latency performance.

As companies deploy AI models across diverse geographies and use cases, hybrid architectures integrating public cloud, private cloud, and edge computing facilitate the dynamic distribution of inference workloads.

- For instance, in June 2025, Akamai introduced its AI Inference platform integrated with SpinKube and WebAssembly to enable low-latency model deployment at the edge. Running on a globally distributed cloud infrastructure, the platform supports lightweight, domain-specific AI models for real-time applications, reflecting a shift from centralized training to distributed AI inference across hybrid cloud-edge environments.

This approach allows data processing closer to the source, improving response times, ensuring regulatory compliance, and optimizing cost by distributing workloads between centralized and edge nodes. Hybrid cloud inference is increasingly vital for supporting real-time AI applications and advancing innovation.
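The workload distribution described above can be sketched as a small routing rule. This is an illustrative example under stated assumptions, not any vendor's platform logic: the `Node` fields, the node names, and the latency and cost values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Node:
    name: str
    region: str          # where the data is processed, e.g. "eu" or "us"
    kind: str            # "edge" or "central"
    latency_ms: float    # estimated round-trip latency to the caller
    cost_per_1k: float   # illustrative cost per 1,000 requests


def pick_node(
    nodes: Sequence[Node],
    latency_budget_ms: float,
    required_region: Optional[str] = None,
) -> Optional[Node]:
    """Choose the cheapest node that meets the latency and residency constraints."""
    candidates = [
        n for n in nodes
        if n.latency_ms <= latency_budget_ms
        and (required_region is None or n.region == required_region)
    ]
    if not candidates:
        return None
    # Prefer lower cost; break ties with lower latency.
    return min(candidates, key=lambda n: (n.cost_per_1k, n.latency_ms))


# Hypothetical fleet mixing edge and centralized capacity.
nodes = [
    Node("edge-paris", "eu", "edge", latency_ms=12, cost_per_1k=0.40),
    Node("central-frankfurt", "eu", "central", latency_ms=45, cost_per_1k=0.25),
    Node("central-virginia", "us", "central", latency_ms=110, cost_per_1k=0.20),
]

print(pick_node(nodes, latency_budget_ms=20, required_region="eu"))
print(pick_node(nodes, latency_budget_ms=100, required_region="eu"))
```

Under these assumptions, a tight latency budget pushes the request to the edge node, while a looser budget lets the cheaper centralized node in the same region serve it, which is the cost and compliance balance the hybrid approach aims for.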

AI Inference Market Report Snapshot

| Segmentation | Details |
|---|---|
| By Compute | GPU, CPU, FPGA, NPU, Others |
| By Memory | DDR, HBM |
| By Deployment | Cloud, On-premise, Edge |
| By Application | Generative AI, Machine Learning, Natural Language Processing, Computer Vision |
| By End User | Consumer, Cloud Service Providers, Enterprises |
| By Region | North America: U.S., Canada, Mexico |
| | Europe: France, UK, Spain, Germany, Italy, Russia, Rest of Europe |
| | Asia-Pacific: China, Japan, India, Australia, ASEAN, South Korea, Rest of Asia-Pacific |
| | Middle East & Africa: Turkey, U.A.E., Saudi Arabia, South Africa, Rest of Middle East & Africa |
| | South America: Brazil, Argentina, Rest of South America |

Market Segmentation

- By Compute (GPU, CPU, FPGA, NPU, and Others): The GPU segment earned USD 27.61 billion in 2024, mainly due to its superior parallel processing capabilities, making it ideal for high-performance AI workloads.

- By Memory (DDR and HBM): The DDR segment held a share of 61.92% in 2024, fueled by its widespread compatibility and cost-effectiveness for general AI inference tasks.

- By Deployment (Cloud, On-premise, and Edge): The cloud segment is projected to reach USD 151.53 billion by 2032, owing to its scalability, flexibility, and access to robust AI infrastructure.

- By Application (Generative AI, Machine Learning, Natural Language Processing, and Computer Vision): The generative AI segment is projected to reach USD 136.69 billion by 2032, owing to rising adoption across content creation, coding, and design applications.

- By End User (Consumer, Cloud Service Providers, and Enterprises): The enterprises segment is projected to reach USD 164.68 billion by 2032, propelled by the growing integration of AI into business operations, analytics, and automation strategies.
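The segment figures in this report mix absolute values and percentage shares. As a rough aid, the sketch below puts them on a common footing by dividing each quoted segment value by the quoted market total for the same year (USD 98.32 billion for 2024, USD 378.37 billion for 2032). The derived percentages are simple arithmetic on the quoted numbers, not figures stated in the report, and the variable names are hypothetical.

```python
# Market totals quoted in the report (USD billion).
TOTAL_2024 = 98.32
TOTAL_2032 = 378.37

# Segment values quoted in the report, paired with the matching year's total.
segments = {
    "GPU (2024)": (27.61, TOTAL_2024),
    "DDR (2032)": (228.57, TOTAL_2032),
    "Cloud (2032)": (151.53, TOTAL_2032),
    "Generative AI (2032)": (136.69, TOTAL_2032),
    "Enterprises (2032)": (164.68, TOTAL_2032),
}

# Share of the total market implied by each segment value.
shares = {name: value / total for name, (value, total) in segments.items()}

# DDR's 2024 value implied by its reported 61.92% share.
ddr_2024_value = TOTAL_2024 * 0.6192

for name, share in shares.items():
    print(f"{name}: {share:.1%} of the market")
print(f"DDR (2024): about USD {ddr_2024_value:.2f} billion")
```

Because each segmentation axis (compute, memory, deployment, application, end user) partitions the same market independently, shares are comparable only within an axis and are not expected to sum to 100% across axes.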

AI Inference Market Regional Analysis

Based on region, the market has been classified into North America, Europe, Asia Pacific, Middle East & Africa, and South America.

The North America AI inference market accounted for a substantial share of 35.95% in 2024, valued at USD 35.34 billion. This dominance is reinforced by the rising adoption of edge AI inference across sectors such as automotive, smart devices, and industrial automation, where ultra-low latency and localized processing are becoming operational requirements.

The growing availability of AI-as-a-Service platforms is also reshaping enterprise AI deployment models by offering scalable inference without dedicated infrastructure.

- For instance, in December 2024, Amazon Web Services (AWS) invested USD 10 billion in Ohio to expand its cloud and AI infrastructure. The investment aims to establish new data centers to meet rising demand, while also supporting technological advancement and strengthening Ohio’s role in the digital economy.

This development strengthens the AI inference ecosystem by expanding cloud-based AI capabilities in the region. As enterprises increasingly rely on robust cloud infrastructure to deploy inference models at scale, these investments are expected to accelerate innovation and adoption across sectors, reinforcing North America’s leading position.

The Asia-Pacific AI inference industry is expected to register the fastest CAGR of 19.29% over the forecast period. This growth is primarily attributed to the rising adoption of AI-powered technologies across key verticals, including manufacturing, telecommunications, and healthcare.

The increasing demand for real-time, low-latency decision-making is boosting the deployment of edge AI inference solutions, particularly within smart manufacturing ecosystems and robotics applications. Furthermore, ongoing government-led digitalization programs and strategic efforts to strengthen domestic AI capabilities are fostering a conducive environment for scalable AI deployment.

- In June 2025, SK Group and Amazon Web Services entered a 15-year strategic partnership to build an AI data center in Ulsan, South Korea. The collaboration aims to establish a new AWS AI Zone featuring dedicated AI infrastructure, UltraCluster networks, and services such as Amazon SageMaker and Amazon Bedrock to support the development of advanced AI applications locally.

Regulatory Frameworks

- In the U.S., the Federal Trade Commission (FTC) and the Food and Drug Administration (FDA) regulate artificial intelligence, with the FTC overseeing its use in consumer protection and the FDA governing its application in medical devices.

Competitive Landscape

The AI inference market is characterized by continuous advancements in engine optimization and a growing shift toward open-source, modular infrastructure.

Companies are prioritizing the refinement of inference engines to enable faster response times, lower latency, and reduced energy consumption. These enhancements are critical for scaling real-time AI applications across cloud, edge, and hybrid environments.

The industry is witnessing rising adoption of open-source frameworks and modular system architectures that allow for flexible, hardware-agnostic deployments. This approach empowers developers to integrate customized inference solutions tailored to specific workloads while optimizing resource utilization and cost-efficiency.

These advancements are enabling greater scalability, interoperability, and operational efficiency in delivering enterprise-grade AI capabilities.

- In June 2025, Oracle and NVIDIA expanded their collaboration to enhance AI training and inference capabilities by making NVIDIA AI Enterprise natively available through the Oracle Cloud Infrastructure Console. This integration enables customers to access over 160 AI tools, including optimized inference microservices, and leverage NVIDIA GB200 NVL72 systems for high-performance, scalable, and cost-efficient AI deployments across distributed cloud environments.

- In May 2025, Red Hat introduced the Red Hat AI Inference Server, built on the open-source vLLM project and enhanced with Neural Magic technologies. The platform is designed to deliver high-performance, cost-efficient AI inference across hybrid cloud environments, supporting generative AI models on any accelerator.

Key Companies in AI Inference Market:

- OpenAI

- Amazon.com, Inc.

- Alphabet Inc

- IBM

- Hugging Face, Inc.

- Baseten

- Together Computer Inc

- Deep Infra

- Modal

- NVIDIA Corporation

- Advanced Micro Devices, Inc.

- Intel Corporation

- Cerebras

- Huawei Investment & Holding Co., Ltd.

- d-Matrix, Inc.

Recent Developments (Partnerships/Product Launches)

- In May 2025, OODA AI partnered with Phala Network to explore the integration of confidential AI inference using trusted execution environments and decentralized GPU infrastructure. The collaboration focuses on building a privacy-preserving, verifiable AI inference network, leveraging zero-knowledge proofs and blockchain-based confidential computing technologies.

- In January 2025, Qualcomm Technologies, Inc. launched the AI On-Prem Appliance Solution and AI Inference Suite. The offerings enable on-premises deployment of generative AI and computer vision workloads, allowing enterprises to maintain data privacy, reduce operational costs, and deploy AI applications locally across industries with support from Honeywell, Aetina, and IBM.

- In January 2025, Novita AI partnered with vLLM to enhance AI inference capabilities for large language models. The collaboration enables developers to deploy open-source LLMs, such as LLaMA 3.1, using vLLM’s PagedAttention algorithm on Novita AI’s GPU cloud infrastructure, improving performance, reducing costs, and advancing open-source AI development.

- In August 2024, Cerebras Systems launched Cerebras Inference, an AI inference solution capable of delivering up to 1,800 tokens per second. Powered by the Wafer Scale Engine 3, the solution offers significantly lower costs and higher performance than GPU-based alternatives, with Free, Developer, and Enterprise pricing tiers.
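To give a sense of what a throughput figure such as 1,800 tokens per second means for response times, the sketch below converts it into generation time for responses of different lengths. It assumes steady-state decoding at the quoted peak rate and ignores time to first token, queueing, and network overhead, so it is a best-case estimate. The function name and the example response lengths are hypothetical.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Best-case time to emit output_tokens at a constant decode rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# Peak rate quoted in the report; real workloads may see lower throughput.
PEAK_TOKENS_PER_SECOND = 1_800

for output_tokens in (150, 900, 3_600):
    seconds = generation_seconds(output_tokens, PEAK_TOKENS_PER_SECOND)
    print(f"{output_tokens} tokens: {seconds:.2f} s")
```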